Rather than just plotting routes, Google Maps now speaks more like a human, understands vague queries, and responds with information tailored to your journey. Whether it’s suggesting a restaurant along the way or warning of a crash ahead, the new version puts awareness and relevance at the center of the driving experience.

Announced quietly and rolling out gradually across devices, the update arrives as users increasingly expect apps to adapt to real-world conditions in real time. The shift aligns with Google’s broader AI strategy: building tools that aren’t just functional but conversational. The new version promises a smoother, less distracting navigation experience, especially in dense cities or unfamiliar areas.

Gemini doesn’t just sit on top of Maps—it transforms its core logic. By linking Street View with massive location datasets and adding real-time voice capabilities, Google is presenting a version of Maps that behaves less like software and more like a person in the passenger seat.

Directions Guided by Landmarks, Not Measurements

One of the most practical changes is how Google Maps gives directions. Instead of telling drivers to “turn in 500 feet,” it now points to visual references along the route, such as shops or buildings. One example Google cited: “Turn right after the Thai Siam restaurant.”

This system is designed to reduce cognitive load behind the wheel. According to Google, the feature cross-references 250 million places with Street View data to pick out recognizable landmarks. Because the directions are more intuitive and visual, drivers are less likely to misjudge distances or take their eyes off the road to check an unclear turn.

Although this change was implemented with little fanfare, it marks a clear step away from abstract instructions toward cues people naturally understand. And it’s available now—users may have already noticed it without realizing the shift in logic behind the voice.

Gemini Brings a Human Tone to Map Interactions

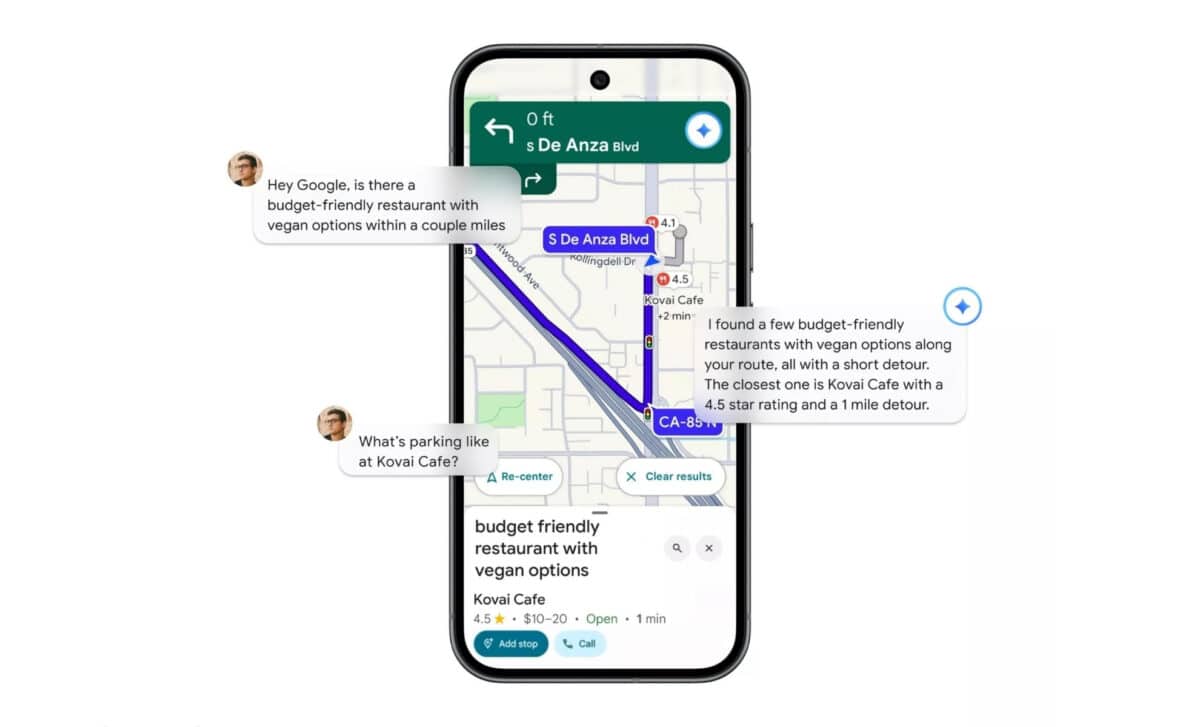

The core of this update is the integration of Gemini, Google’s generative AI model, into the Maps experience. This transforms the app from a command-response tool into a conversational assistant. Drivers can say things like, “Find a vegan restaurant along my route,” or “What’s parking like at my destination?” and receive complete, context-aware answers.

Describing the upgrade in a press briefing, Google likened the assistant to a “local expert in your front seat,” Carscoops reported. The AI draws on what Google describes as reliable web sources, along with Google’s own location data, to produce responses that feel informed and relevant. That means it can suggest a garage, offer restaurant recommendations, or even report a traffic jam you spot, all through simple voice input.

Gemini also enables users to perform actions beyond navigation. You can ask about last night’s sports scores, check restaurant menus, or even add calendar appointments on the go. While Google claims hallucinations are unlikely, no technical breakdown of how that’s ensured was shared during the media briefing.

Proactive Traffic Alerts and Real-World Object Recognition

A subtle but helpful improvement is the rollout of proactive driving alerts, aimed at users not actively navigating. If Maps detects a crash or slowdown ahead, it now issues warnings even when navigation is off. These alerts appear as notifications, letting drivers react early to reroute and avoid delays.

This change acknowledges that people often drive familiar routes without guidance yet still need timely information. Proactive alerts bridge that gap, offering context-sensitive updates without requiring the user to enter a destination.

Alongside this, the app’s Lens feature—also updated with Gemini—brings visual intelligence into play once the car is parked. By tapping the camera icon, users can point at buildings or businesses and ask questions like, “What is this place known for?” or “Is it good for solo diners?” The system delivers concise, aggregated responses using local data.

This new Lens experience is set to begin rolling out in the U.S. by the end of the month, and it’s designed to help users make sense of their surroundings more easily, especially in unfamiliar urban areas or while traveling.